Hardware acceleration enables power-efficient computing on your system or network for applications that require low latency.

Sensor Acceleration

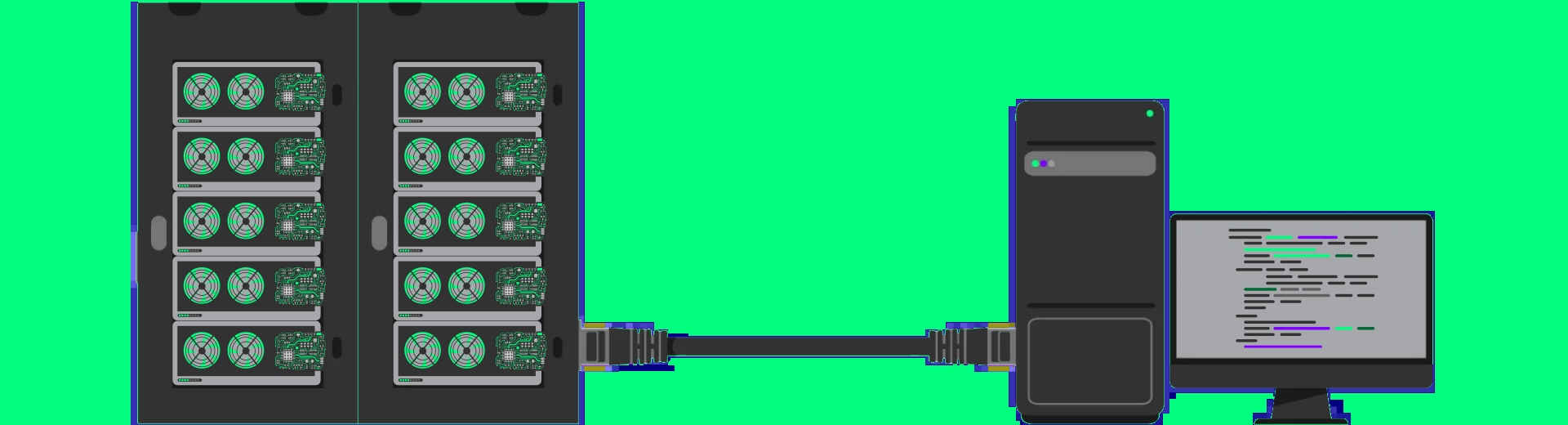

Accelerators handle real-time processing of large volumes of data, such as high-resolution video and images. In high-frequency applications, accelerators bridge the digital and RF domains.

FPGAs and SoCs can handle ultra-high data ingress and real-time processing in environments constrained by size, weight, and power (SWaP).

Network Acceleration

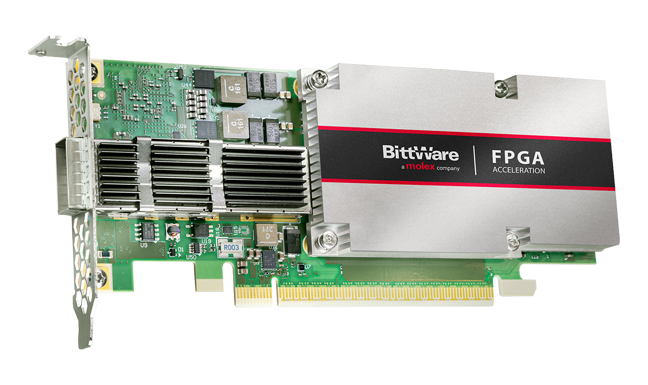

Network accelerators are designed to increase routing throughput (the ability to route many streams in parallel) using hardware-adaptable datapaths for maximum performance.

Functions suited to FPGA-based hardware acceleration fall into one of four categories:

- Functions evolving too quickly for ASIC solutions
- Functions too new to harden into an ASIC
- Rapidly emerging proprietary functions that previously ran on CPUs
- Functions too specialized to justify an ASIC

Compute Acceleration

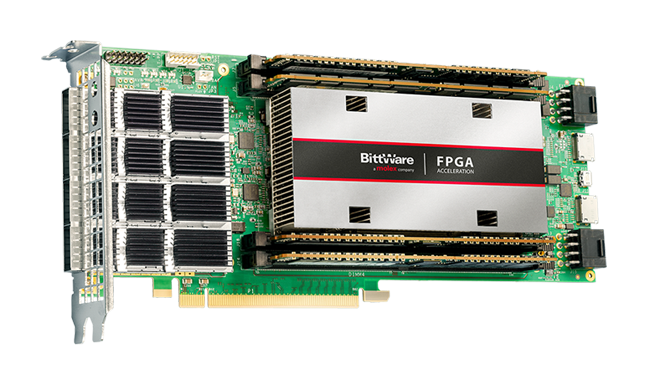

AI accelerators are designed to speed up machine learning computations, improving performance, reducing latency, and lowering the cost of deploying machine learning applications.

The ecosystem of FPGA- and ASIC-based AI solutions is optimized for scalable inference, helping you scale up to datacenter-grade sensor processors or maximize edge performance per watt.
